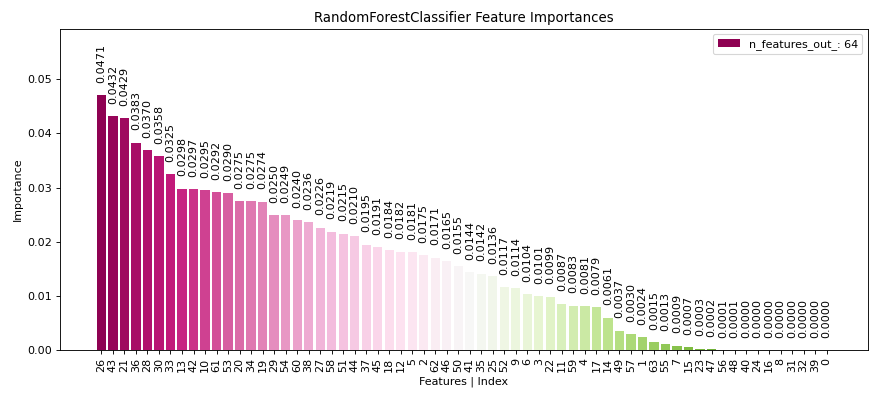

plot_feature_importances

- scikitplot.api.estimators.plot_feature_importances(estimator, *, feature_names=None, class_index=None, threshold=None, title='Feature Importances', title_fontsize='large', text_fontsize='medium', cmap='PiYG', order=None, orientation='vertical', x_tick_rotation=None, bar_padding=11, digits=4, display_bar_label=True, **kwargs)

Generate a plot of a sklearn model’s feature importances.

This function handles different types of classifiers and reads their feature importance (feature_importances_) or coefficient (coef_) attributes. If the model provides neither, it computes sklearn permutation importances instead. It also supports models wrapped in pipelines.
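The lookup order described above can be sketched roughly as follows. This is a minimal illustration and not scikit-plots' actual implementation: the helper name `resolve_importances` is hypothetical, the `X` and `y` arguments for the permutation fallback are an assumption of this sketch (the documented function takes only the estimator), and averaging absolute coefficients when no `class_index` is given is likewise an assumption.

```python
def resolve_importances(estimator, X=None, y=None, class_index=None):
    """Hypothetical helper: pick an importance vector from a fitted model."""
    # Unwrap a Pipeline-like object and use its last step as the model.
    model = estimator.steps[-1][1] if hasattr(estimator, "steps") else estimator

    # 1) Tree ensembles and similar models expose feature_importances_.
    if hasattr(model, "feature_importances_"):
        return list(model.feature_importances_)

    # 2) Linear models expose coef_ (one row per class when multi-class).
    if hasattr(model, "coef_"):
        coef = model.coef_
        is_2d = hasattr(coef[0], "__len__")
        rows = [list(r) for r in coef] if is_2d else [list(coef)]
        if class_index is not None:
            return rows[class_index]
        if len(rows) == 1:
            return rows[0]
        # Assumption: with no class selected, average |coef| across classes.
        n_features = len(rows[0])
        return [sum(abs(r[j]) for r in rows) / len(rows) for j in range(n_features)]

    # 3) Fallback: permutation importance, which needs data to score on.
    if X is None or y is None:
        raise ValueError("permutation importance requires X and y")
    from sklearn.inspection import permutation_importance  # imported lazily

    result = permutation_importance(estimator, X, y, n_repeats=5, random_state=0)
    return list(result.importances_mean)
```

Passing the whole pipeline to `permutation_importance` in the fallback means the importances refer to the raw input columns rather than to the transformed features seen by the final step.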

- Parameters:

- estimator : fitted estimator object

Fitted classifier or a fitted Pipeline in which the last estimator is a classifier.

- feature_names : list of str, optional, default=None

List of feature names corresponding to the features. If None, feature indices are used.

- class_index : int, optional, default=None

Index of the class of interest for multi-class classification. Defaults to None.

- threshold : float, optional, default=None

Threshold for filtering features by absolute importance. Only features with an absolute importance greater than this threshold will be plotted. Defaults to None (plot all features).

- title : str, optional, default='Feature Importances'

Title of the generated plot.

- title_fontsize : str or int, optional, default='large'

Matplotlib-style fontsizes. Use e.g. “small”, “medium”, “large” or integer-values.

- text_fontsize : str or int, optional, default='medium'

Matplotlib-style fontsizes. Use e.g. “small”, “medium”, “large” or integer-values.

- cmap : None, str or matplotlib.colors.Colormap, optional, default='PiYG'

Colormap used for plotting. Options include 'viridis', 'PiYG', 'plasma', 'inferno', etc. See the Matplotlib colormap documentation for available choices: https://matplotlib.org/stable/users/explain/colors/index.html

- order : {'ascending', 'descending', None}, optional, default=None

Order of feature importance in the plot. Defaults to None (automatically set based on orientation).

- orientation : {'vertical' | 'v' | 'y', 'horizontal' | 'h' | 'y'}, optional, default='vertical'

Orientation of the bar plot. Defaults to ‘vertical’.

- x_tick_rotation : int, optional, default=None

Rotates x-axis tick labels by the specified angle. Defaults to None (automatically set based on orientation).

- bar_padding : float, optional, default=11

Padding between bars in the plot.

- digits : int, optional, default=4

Number of digits used when formatting the values shown in the plot.

- display_bar_label : bool, optional, default=True

Whether to display the bar labels.

Added in version 0.3.9.

- **kwargs: dict

Generic keyword arguments.
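To make the interaction of `feature_names`, `threshold` and `order` concrete, here is a small sketch in plain Python. The helper `select_features` is hypothetical and only mirrors the parameter descriptions above; in particular, sorting by the signed value (rather than by absolute value) is an assumption, since the text does not say which one the library uses.

```python
def select_features(importances, feature_names=None, threshold=None, order=None):
    """Hypothetical helper mirroring the threshold/order parameters."""
    # If no names are given, fall back to feature indices.
    if feature_names is None:
        feature_names = list(range(len(importances)))
    pairs = list(zip(feature_names, importances))

    # Keep only features whose absolute importance exceeds the threshold.
    if threshold is not None:
        pairs = [(name, value) for name, value in pairs if abs(value) > threshold]

    # Assumption: order sorts by the signed importance value.
    if order in ("ascending", "descending"):
        pairs.sort(key=lambda p: p[1], reverse=(order == "descending"))
    return pairs


kept = select_features(
    [0.05, -0.4, 0.2], ["a", "b", "c"], threshold=0.1, order="descending"
)
print(kept)
```

Because the filter uses the absolute value, a strongly negative coefficient such as the one for "b" survives the threshold even though it sorts last in descending order.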

- Returns:

- ax : matplotlib.axes.Axes

The axes on which the plot was drawn.

- Other Parameters:

- ax : matplotlib.axes.Axes, optional, default=None

The axes to draw the plot on. If None is passed, the current axes will be used (or generated if required).

Added in version 0.4.0.

- fig : matplotlib.pyplot.figure, optional, default=None

The figure to draw the plot on. If None is passed, the current figure will be used (or generated if required).

Added in version 0.4.0.

- figsize : tuple, optional, default=None

Width and height of the figure in inches, given as a tuple, e.g. (12, 5).

Added in version 0.4.0.

- nrows : int, optional, default=1

Number of rows in the subplot grid.

Added in version 0.4.0.

- ncols : int, optional, default=1

Number of columns in the subplot grid.

Added in version 0.4.0.

- plot_style : str, optional, default=None

Check available styles with plt.style.available. Examples include: ['ggplot', 'seaborn', 'bmh', 'classic', 'dark_background', 'fivethirtyeight', 'grayscale', 'seaborn-bright', 'seaborn-colorblind', 'seaborn-dark', 'seaborn-dark-palette', 'tableau-colorblind10', 'fast'].

Added in version 0.4.0.

- show_fig : bool, default=True

Show the plot.

Added in version 0.4.0.

- save_fig : bool, default=False

Save the plot.

Added in version 0.4.0.

- save_fig_filename : str, optional, default=''

Path and file type used to save the plot. If nothing is specified, the plot is saved as a PNG inside result_images under the current working directory, with the image named after the used func.__name__.

Added in version 0.4.0.

- overwrite : bool, optional, default=True

If False and a file exists, auto-increments the filename to avoid overwriting.

Added in version 0.4.0.

- add_timestamp : bool, optional, default=False

Whether to append a timestamp to the filename. Default is False.

Added in version 0.4.0.

- verbose : bool, optional

If True, enables verbose output with informative messages during execution. Useful for debugging or understanding internal operations such as backend selection, font loading, and file saving status. If False, runs silently unless errors occur.

Default is False.

Added in version 0.4.0: The verbose parameter was added to control logging and user feedback verbosity.
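The way `save_fig_filename`, `overwrite` and `add_timestamp` combine can be illustrated with a small path-resolution sketch. This is not the library's code: `resolve_save_path` and its `base_dir` argument are hypothetical, and the timestamp format and the `_1`, `_2` increment suffix are assumptions chosen for the illustration.

```python
from datetime import datetime
from pathlib import Path


def resolve_save_path(filename="", func_name="plot_feature_importances",
                      overwrite=True, add_timestamp=False,
                      base_dir="result_images"):
    """Hypothetical helper: decide where a figure would be written."""
    # No filename given: fall back to <base_dir>/<function name>.png.
    path = Path(filename) if filename else Path(base_dir) / f"{func_name}.png"
    if not path.suffix:
        path = path.with_suffix(".png")

    # Optionally append a timestamp to the file stem (format is an assumption).
    if add_timestamp:
        stamp = datetime.now().strftime("%Y%m%d_%H%M%S")
        path = path.with_name(f"{path.stem}_{stamp}{path.suffix}")

    # With overwrite=False, auto-increment until the name is unused.
    if not overwrite:
        stem, counter = path.stem, 1
        while path.exists():
            path = path.with_name(f"{stem}_{counter}{path.suffix}")
            counter += 1
    return path
```

With the defaults, repeated calls resolve to the same path and a later save replaces the earlier file; setting `overwrite=False` or `add_timestamp=True` is what keeps earlier images.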

Examples

    >>> from sklearn.datasets import load_digits as data_10_classes
    >>> from sklearn.model_selection import train_test_split
    >>> from sklearn.ensemble import RandomForestClassifier
    >>> import scikitplot as skplt
    >>> X, y = data_10_classes(return_X_y=True, as_frame=False)
    >>> X_train, X_val, y_train, y_val = train_test_split(
    ...     X, y, test_size=0.5, random_state=0
    ... )
    >>> model = RandomForestClassifier(random_state=0).fit(X_train, y_train)
    >>> skplt.estimators.plot_feature_importances(
    ...     model,
    ...     orientation='y',
    ...     figsize=(11, 5),
    ... );
